Appendix A Fourier Transforms

A.1 The Fourier Transform

The continuous Fourier transform is important in mathematics, engineering, and the physical sciences. Its counterpart for discretely sampled functions is the discrete Fourier transform (DFT), which is normally computed using the so-called fast Fourier transform (FFT). The DFT has revolutionized modern society, as it is ubiquitous in digital electronics and signal processing. Radio astronomers are particularly avid users of Fourier transforms because Fourier transforms are key components in data processing (e.g., periodicity searches) and instruments (e.g., antennas, receivers, spectrometers), and they are the cornerstones of interferometry and aperture synthesis. Useful references include Bracewell [15] (which is on the shelves of most radio astronomers) and the Wikipedia99http://en.wikipedia.org/wiki/Fourier_transform. and Mathworld1010http://mathworld.wolfram.com/FourierTransform.html. entries for the Fourier transform.

The Fourier transform is a reversible, linear transform with many important properties. Any complex function of the real variable that is both integrable and contains only finite discontinuities has a complex Fourier transform of the real variable , where the product of and is dimensionless and unity. For example, the Fourier transform of the waveform expressed as a time-domain signal is the spectrum expressed as a frequency-domain signal. If is given in seconds of time, is in .

The Fourier transform of is defined by

| (A.1) |

which is usually known as the forward transform, and

| (A.2) |

which is the inverse transform. In both cases, . Alternative definitions of the Fourier transform are based on angular frequency , have different normalizations, or the opposite sign convention in the complex exponential. Successive forward and reverse transforms return the original function, so the Fourier transform is cyclic and reversible. The symmetric symbol is often used to mean “is the Fourier transform of”; e.g., .

The complex exponential (Appendix B.3) is the heart of the transform. A complex exponential is simply a complex number where both the real and imaginary parts are sinusoids. The exact relation is called Euler’s formula

| (A.3) |

which leads to the famous (and beautiful) identity that relates five of the most important numbers in mathematics. Complex exponentials are much easier to manipulate than trigonometric functions, and they provide a compact notation for dealing with sinusoids of arbitrary phase, which form the basis of the Fourier transform.

Complex exponentials (or sines and cosines) are periodic functions, and the set of complex exponentials is complete and orthogonal. Thus the Fourier transform can represent any piecewise continuous function and minimizes the least-square error between the function and its representation. There exist other complete and orthogonal sets of periodic functions; for example, Walsh functions1111http://en.wikipedia.org/wiki/Walsh_function (square waves) are useful for digital electronics. Why do we always encounter complex exponentials when solving physical problems? Why are monochromatic waves sinusoidal, and not periodic trains of square waves or triangular waves? The reason is that the derivatives of complex exponentials are just rescaled complex exponentials. In other words, the complex exponentials are the eigenfunctions of the differential operator. Most physical systems obey linear differential equations. Thus an analog electronic filter will convert a sine wave into another sine wave having the same frequency (but not necessarily the same amplitude and phase), while a filtered square wave will not be a square wave. This property of complex exponentials makes the Fourier transform uniquely useful in fields ranging from radio propagation to quantum mechanics.

A.2 The Discrete Fourier Transform

The continuous Fourier transform converts a time-domain signal of infinite duration into a continuous spectrum composed of an infinite number of sinusoids. In astronomical observations we deal with signals that are discretely sampled, usually at constant intervals, and of finite duration or periodic. For such data, only a finite number of sinusoids is needed and the discrete Fourier transform (DFT) is appropriate. For almost every Fourier transform theorem or property, there is a related theorem or property for the DFT. The DFT of data points (where ) sampled at uniform intervals and its inverse are defined by

| (A.4) |

and

| (A.5) |

Once again, sign and normalization conventions may vary, but this definition is the most common. The continuous variable has been replaced by the discrete variable (usually an integer) .

The DFT of an -point input time series is an -point frequency spectrum, with Fourier frequencies ranging from , through the 0-frequency or so-called DC component, and up to the highest Fourier frequency . Each bin number represents the integer number of sinusoidal periods present in the time series. The amplitudes and phases represent the amplitudes and phases of those sinusoids. In summary, each bin can be described by .

For real-valued input data, the resulting DFT is hermitian—the real part of the spectrum is an even function and the imaginary part is odd, such that , where the bar represents complex conjugation. This means that all of the “negative” Fourier frequencies provide no new information. Both the and bins are real valued, and there is a total of Fourier bins, so the total number of independent pieces of information (i.e., real and complex parts) is , just as for the input time series. No information is created or destroyed by the DFT.

Other symmetries existing between time- and frequency-domain signals are shown in Table A.1.

| Time domain | Frequency domain |

|---|---|

| real | hermitian (real=even, imag=odd) |

| imaginary | anti-hermitian (real=odd, imag=even) |

| even | even |

| odd | odd |

| real and even | real and even (i.e., cosine transform) |

| real and odd | imaginary and odd (i.e., sine transform) |

| imaginary and even | imaginary and even |

| imaginary and odd | real and odd |

Usually the DFT is computed by a very clever (and truly revolutionary) algorithm known as the fast Fourier transform (FFT). The FFT was discovered by Gauss in 1805 and re-discovered many times since, but most people attribute its modern incarnation to James W. Cooley and John W. Tukey [32] in 1965. The key advantage of the FFT over the DFT is that the operational complexity decreases from for a DFT to for the FFT. Modern implementations of the FFT1212http://www.fftw.org. allow complexity for any value of , not just those that are powers of two or the products of only small primes.

A.3 The Sampling Theorem

Much of modern radio astronomy is now based on digital signal processing (DSP), which relies on continuous radio waves being accurately represented by a series of discrete digital samples of those waves. An amazing theorem which underpins DSP and has strong implications for information theory is known as the Nyquist–Shannon theorem or the sampling theorem. It states that any bandwidth-limited (or band-limited) continuous function confined within the frequency range may be reconstructed exactly from uniformly spaced samples separated in time by . The critical sampling rate is known as the Nyquist rate, and the spacing between samples must satisfy seconds. A Nyquist-sampled time series contains all the information of the original continuous signal, and because the DFT is a reversible linear transform, the DFT of that time series contains all of the information as well.

Sampling Theorem Examples. The (young and undamaged) human ear can hear sounds with frequency components up to kHz. Therefore, nearly perfect audio recording systems must sample audio signals at Nyquist frequencies . Audio CDs are sampled at 44.1 kHz to give imperfect lowpass audio filters a 2 kHz buffer to remove higher frequencies which would otherwise be aliased into the audible band. A visual example of an aliased signal is seen in movies where the 24 frame-per-second rate of the movie camera performs “stroboscopic” sampling of a rotating wagon wheel with uniformly spaced spokes. When the rotation frequency of the wheel is below the Nyquist rate (), the wheel appears to be turning at the correct rate and in the correct direction. When it is rotating faster than but slower than , it appears to be rotating backward and at a slower rate. As the rotation rate approaches , the wheel apparently slows down and then stops when the rotation rate equals twice the Nyquist rate.The frequency corresponding to the sampled bandwidth, which is also the maximum frequency in a DFT of the Nyquist-sampled signal of length , is known as the Nyquist frequency,

| (A.6) |

The Nyquist frequency describes the high-frequency cut-off of the system doing the sampling, and is therefore a property of that system. Any frequencies present in the original signal at higher frequencies than the Nyquist frequency, meaning that the signal was either not properly Nyquist sampled or band limited, will be aliased to other lower frequencies in the sampled band as described below. No aliasing occurs for band-limited signals sampled at the Nyquist rate or higher.

In a DFT, where there are samples spanning a total time , the frequency resolution is . Each Fourier bin number represents exactly sinusoidal oscillations in the original data , and therefore a frequency in Hz. The Nyquist frequency corresponds to bin . If the signal is not bandwidth limited and higher-frequency components (with or Hz) exist, those frequencies will show up in the DFT shifted to lower frequencies , assuming that . Such aliasing can be avoided by filtering the input data to ensure that it is properly band limited.

Note that the Sampling theorem does not demand that the original continuous signal be a baseband signal, one whose band begins at zero frequency and continues to frequency . The signal can be in any frequency range to such that . Most radio receivers and instruments have a finite bandwidth centered at some high frequency such that the bottom of the band is not at zero frequency. They will either use the technique of heterodyning (Section 3.6.4) to mix the high-frequency band to baseband where it can then be Nyquist sampled or, alternatively, aliasing can be used as part of the sampling scheme. For example, a 1–2 GHz filtered band from a receiver could be mixed to baseband and sampled at 2 GHz, the Nyquist rate for that bandwidth; or the original signal could be sampled at 2 GHz and the 1 GHz bandwidth will be properly Nyquist sampled, but the band will be flipped in its frequency direction by aliasing. This is often called sampling in the “second Nyquist zone.” Higher Nyquist zones can be sampled as well, and the band direction flips with each successive zone (e.g., the band direction is normal in odd-numbered Nyquist zones and flipped in even-numbered zones) by aliasing.

A.4 The Power Spectrum

A useful quantity in astronomy is the power spectrum . The power spectrum preserves no phase information from the original function. Rayleigh’s theorem (sometimes called Plancherel’s theorem and related to Parseval’s theorem for Fourier series) shows that the integral of the power spectrum equals the integral of the squared modulus of the function (e.g., signal energies are equal in the frequency and time domains):

| (A.7) |

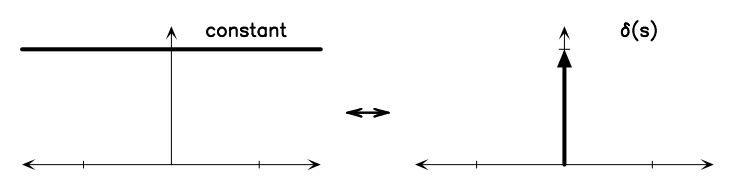

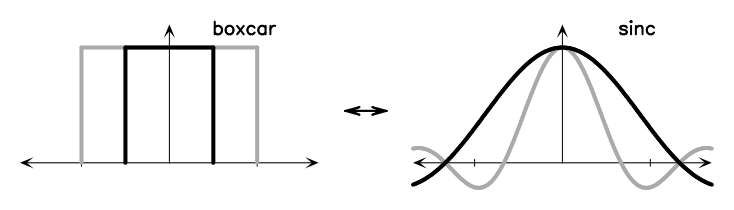

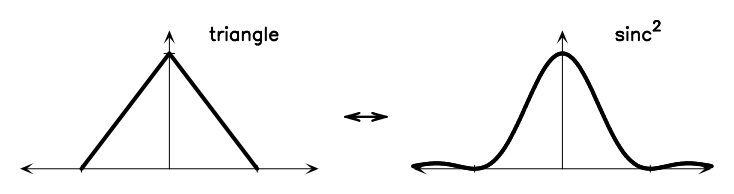

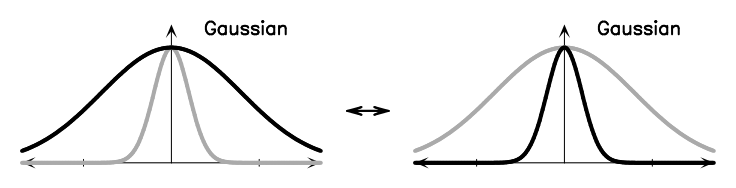

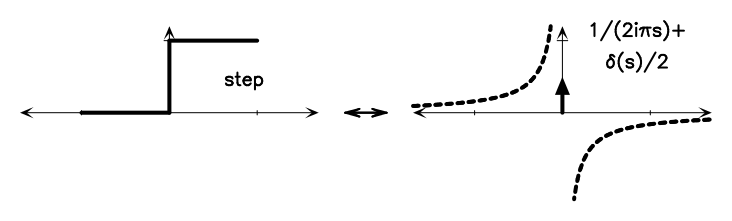

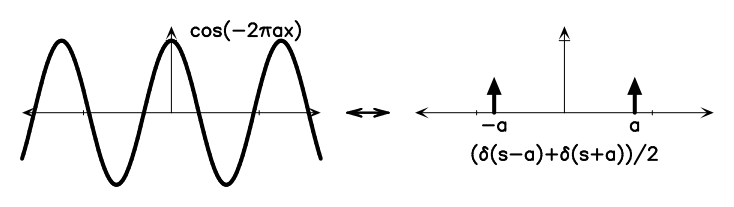

A.5 Basic Transforms

Figure A.1 shows some basic Fourier transform pairs. These can be combined using the Fourier transform theorems below to generate the Fourier transforms of many different functions. There is a nice Java applet1313http://webphysics.davidson.edu/Applets/mathapps/mathapps_fft.html. on the web that lets you experiment with various simple DFTs.

|

|

|

|

|

|

|

|

A.6 Basic Fourier Theorems

Addition Theorem. The Fourier transform of the sum of two functions and is the sum of their Fourier transforms and . This basic theorem follows from the linearity of the Fourier transform:

| (A.8) |

Likewise from linearity, if is a constant, then

| (A.9) |

Shift Theorem. A function shifted along the -axis by to become has the Fourier transform . The magnitude of the transform is the same, only the phases change:

| (A.10) |

Similarity Theorem. For a function with a Fourier transform , if the -axis is scaled by a constant so that we have , the Fourier transform becomes . In other words, a short, wide function in the time domain transforms to a tall, narrow function in the frequency domain, always conserving the area under the transform. This is the basis of the uncertainty principle in quantum mechanics and the diffraction limits of radio telescopes:

| (A.11) |

Modulation Theorem. The Fourier transform of the product is . This theorem is very important in radio astronomy as it describes how signals can be “mixed” to different intermediate frequencies (IFs):

| (A.12) |

Derivative Theorem. The Fourier transform of the derivative of a function , , is :

| (A.13) |

Differentiation in the time domain boosts high-frequency spectral components, attenuates low-frequency components, and eliminates the DC component altogether.

A.7 Convolution and Cross-Correlation

Convolution shows up in many aspects of astronomy, most notably in the point-source response of an imaging system and in interpolation. Convolution, which we will represent by (the symbol is also frequently used for convolution), multiplies one function by the time-reversed kernel function , shifts by some amount , and integrates from to . The convolution of the functions and is a linear functional defined by

| (A.14) |

In Figure A.2, notice how the delta-function portion of the function produces an image of the kernel in the convolution. For a time series, that kernel defines the impulse response of the system. For an antenna or imaging system, the kernel is variously called the beam, the point-source response, or the point-spread function.

A very nice applet showing how convolution works is available online1414http://www.jhu.edu/~signals/convolve/index.html. (there is also a discrete version1515http://www.jhu.edu/~signals/discreteconv2/index.html.); a different visualization tool is also available. 1616https://maxwell.ict.griffith.edu.au/spl/Excalibar/Jtg/Conv.html.

The convolution theorem is extremely powerful and states that the Fourier transform of the convolution of two functions is the product of their individual Fourier transforms:

| (A.15) |

Cross-correlation is a very similar operation to convolution, except that the kernel is not time-reversed. Cross-correlation is used extensively in interferometry and aperture synthesis imaging, and is also used to perform optimal “matched filtering” of data to detect weak signals in noise. Cross-correlation is represented by the pentagram symbol and defined by

| (A.16) |

In this case, unlike for convolution, .

Closely related to the convolution theorem, the cross-correlation theorem states that the Fourier transform of the cross-correlation of two functions is equal to the product of the individual Fourier transforms, where one of them has been complex conjugated:

| (A.17) |

Autocorrelation is a special case of cross-correlation with . The related autocorrelation theorem is also known as the Wiener–Khinchin theorem and states

| (A.18) |

In words, the Fourier transform of an autocorrelation function is the power spectrum, or equivalently, the autocorrelation is the inverse Fourier transform of the power spectrum. Many radio-astronomy instruments compute power spectra using autocorrelations and this theorem. The following diagram summarizes the relations between a function, its Fourier transform, its autocorrelation, and its power spectrum:

One important thing to remember about convolution and correlation using DFTs is that they are cyclic with a period corresponding to the length of the longest component of the convolution or correlation. Unless this periodicity is taken into account (usually by zero-padding one of the input functions), the convolution or correlation will wrap around the ends and possibly “contaminate” the resulting function.

A.8 Other Fourier Transform Links

Of interest on the web, other Fourier-transform-related links include a Fourier series applet,1717http://www.jhu.edu/~signals/fourier2/index.html. some tones, harmonics, filtering, and sounds,1818http://www.jhu.edu/~signals/listen-new/listen-newindex.htm. and a nice online book on the mathematics of the DFT.1919http://ccrma.stanford.edu/~jos/mdft/mdft.html.